Blog posts on technologies to make deep learning efficient, accessible, and user-friendly.

CompOFA: Compound Once-For-All Networks for Faster Multi-Platform Deployment

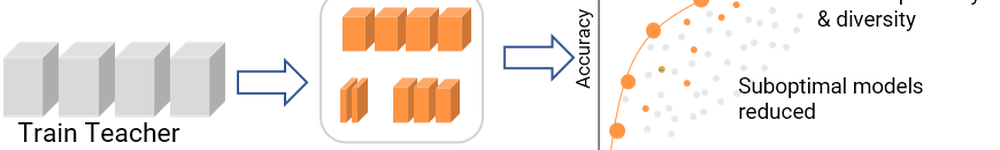

CompOFA improves the speed, cost, and usability of jointly training models for many deployment targets. By drawing on insights from both model design and system deployment, we aim to address an important problem for the real-world usability of DNNs.

Anatomy of a High-Speed Convolution

It’s no surprise that modern deep-learning libraries have production-level, highly optimized implementations of most operations. But what exactly is the black magic these libraries use that we mere mortals don’t? What does it actually take to “optimize” or accelerate neural network operations?

Making Neural Nets Work With Low Precision

Deploying efficient neural nets on mobile devices is becoming increasingly important. This post explores the concept of quantized inference and how it works in TensorFlow Lite.
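As a rough illustration of what quantized inference involves, here is a minimal sketch of affine (scale and zero-point) 8-bit quantization, where a real value is approximated as `r = scale * (q - zero_point)`. This is the general scheme behind integer inference in TensorFlow Lite, but the code is plain Python with hypothetical helper names (`choose_params`, `quantize`, `dequantize`, `quantized_dot`). It does not use or mirror the TensorFlow Lite API.

```python
def choose_params(rmin, rmax, qmin=0, qmax=255):
    """Pick scale and zero point so that [rmin, rmax] maps onto [qmin, qmax]."""
    # Extend the range to include 0 so that real zero is exactly representable.
    rmin, rmax = min(rmin, 0.0), max(rmax, 0.0)
    scale = (rmax - rmin) / (qmax - qmin) if rmax > rmin else 1.0
    zero_point = int(round(qmin - rmin / scale))
    zero_point = max(qmin, min(qmax, zero_point))
    return scale, zero_point


def quantize(values, scale, zero_point, qmin=0, qmax=255):
    """Map real values to clamped 8-bit integers."""
    return [max(qmin, min(qmax, int(round(v / scale)) + zero_point)) for v in values]


def dequantize(qvalues, scale, zero_point):
    """Recover approximate real values from quantized integers."""
    return [scale * (q - zero_point) for q in qvalues]


def quantized_dot(qa, za, sa, qb, zb, sb):
    """Dot product computed with an integer accumulator and rescaled once at the end."""
    acc = sum((a - za) * (b - zb) for a, b in zip(qa, qb))
    return sa * sb * acc


a = [-1.0, 0.5, 2.0]
b = [0.25, -0.75, 1.5]
sa, za = choose_params(min(a), max(a))
sb, zb = choose_params(min(b), max(b))
qa, qb = quantize(a, sa, za), quantize(b, sb, zb)
approx = quantized_dot(qa, za, sa, qb, zb, sb)  # close to the float result 2.375
```

The inner loop touches only small integers. The float scales are applied once per output, which is what makes this kind of scheme attractive on mobile hardware.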